Introduction to Machine Learning

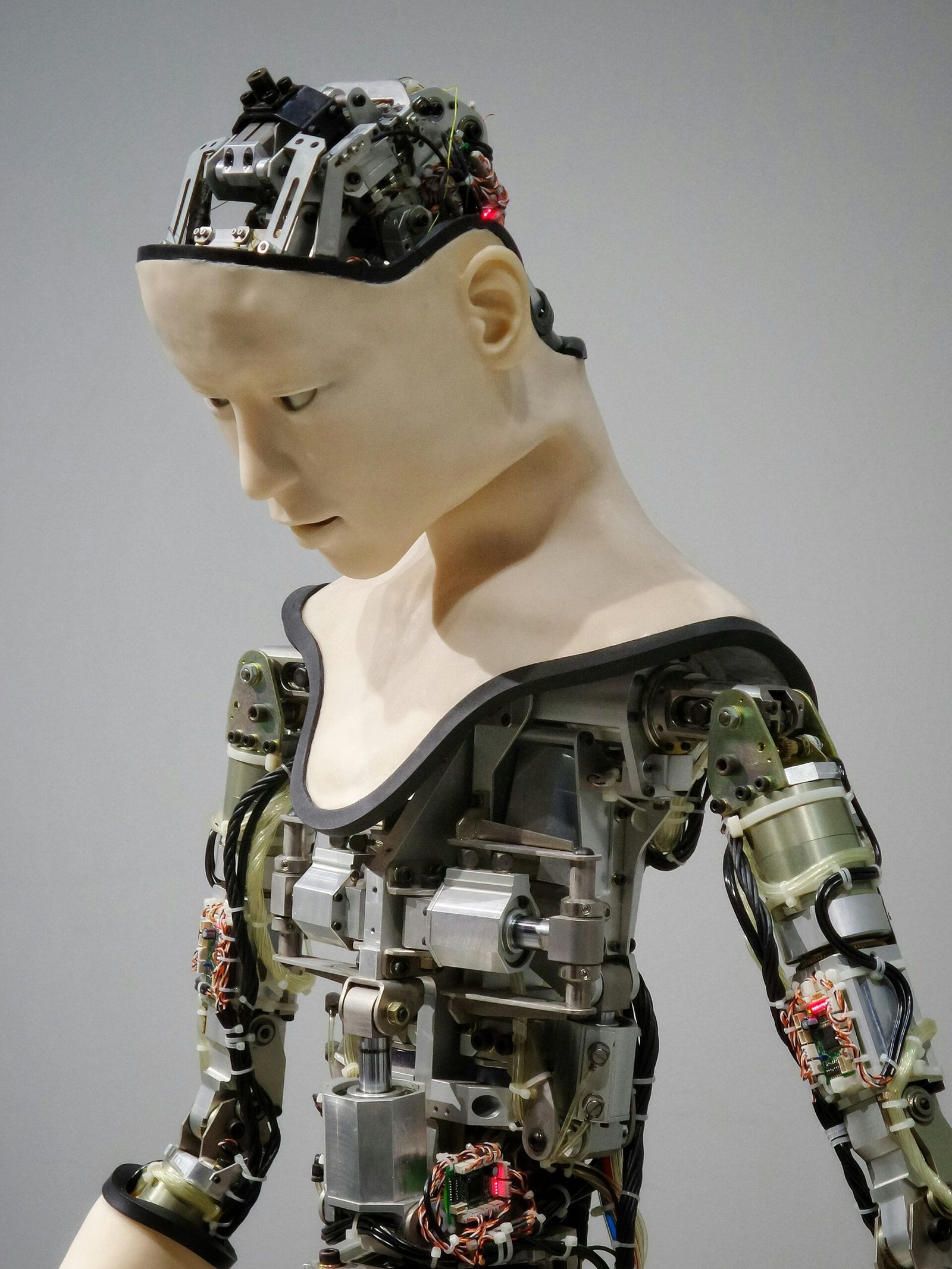

Machine learning, a subset of artificial intelligence, refers to the process by which computer systems learn from data to make predictions or decisions without being explicitly programmed for specific tasks. It utilizes algorithms and statistical models to analyze and draw inferences from patterns within data, enabling systems to improve their performance over time with experience. This paradigm shift from traditional programming to data-driven models marks a significant leap in computing capabilities.

The significance of machine learning in the modern world cannot be overstated. Its applications span a wide range of industries, from healthcare and finance to retail and technology. In healthcare, for instance, machine learning algorithms analyze vast amounts of medical data to assist in diagnostics and treatment planning. In finance, they predict market trends and detect fraudulent activities. Retailers leverage machine learning to personalize customer experiences and optimize inventory management. These examples illustrate that understanding machine learning is essential for anyone looking to engage with the cutting-edge advancements shaping our future.

The history of machine learning dates back to the 1950s, when the concept of artificial intelligence first took shape, marked by Alan Turing’s seminal 1950 paper "Computing Machinery and Intelligence." Early developments focused on rule-based systems and symbolic reasoning. Since then, more sophisticated algorithms, increased computational power, and the availability of massive datasets have transformed machine learning from a theoretical curiosity into a practical and indispensable tool. This evolution has been driven by innovations such as neural networks, support vector machines, and deep learning frameworks.

Today, machine learning empowers numerous everyday applications that many take for granted. These include recommendation systems on streaming platforms, voice-activated personal assistants, and even autonomous vehicles. As we delve deeper into the intricacies of machine learning, it is important to recognize its foundational principles and appreciate its pervasive impact on modern society. This understanding will set the stage for more detailed discussions on the methodologies, challenges, and future directions of this dynamic field.

Types of Machine Learning

Machine learning is broadly categorized into three primary types: supervised learning, unsupervised learning, and reinforcement learning. Each type serves unique purposes and is suited to different kinds of problems, offering diverse approaches to data analysis and prediction.

Supervised Learning

In supervised learning, the model is trained using labeled data, meaning the input data comes annotated with the correct outputs. This process allows the algorithm to learn the relationships between input variables and the target variable. A key aspect of supervised learning is the availability of a comprehensive dataset that provides clear input-output pairs for training the model.

Common algorithms used in supervised learning include linear regression, logistic regression, support vector machines (SVM), and neural networks. Practical applications of supervised learning are vast and include spam detection in email systems, where the model learns to differentiate between spam and non-spam emails, and handwriting recognition, where it can identify and classify handwritten characters based on labeled examples.
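To make the idea of learning from input-output pairs concrete, here is a minimal sketch of logistic regression trained with stochastic gradient descent on a toy spam dataset. The two features (counts of the words "free" and "meeting"), the data, and the function names are all hypothetical and chosen only for illustration; a real spam filter would use far richer features and an established library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(features, labels, lr=0.1, epochs=200):
    """Fit weights and a bias with plain stochastic gradient descent."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = p - y  # gradient of the log loss with respect to the score
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

def predict(weights, bias, x):
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if sigmoid(score) >= 0.5 else 0

# Hypothetical labeled data: [count of "free", count of "meeting"] -> 1 = spam, 0 = not spam
X = [[3, 0], [2, 0], [4, 1], [0, 2], [0, 3], [1, 2]]
y = [1, 1, 1, 0, 0, 0]

weights, bias = train_logistic(X, y)
training_predictions = [predict(weights, bias, x) for x in X]
```

The labels in `y` are what make this supervised: each update nudges the weights so that the predicted probability moves toward the known correct answer.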

Unsupervised Learning

Unsupervised learning, in contrast, deals with data without labeled responses. The aim here is to uncover hidden patterns or intrinsic structures within the data. Because no correct outputs are provided, the model must infer the structure on its own, typically through clustering, association analysis, or dimensionality reduction.

Common unsupervised learning algorithms include k-means clustering, hierarchical clustering, and principal component analysis (PCA). Real-world applications of unsupervised learning include market basket analysis, where retailers analyze the purchasing behaviors of customers to identify product affinities, and anomaly detection in network security systems, where unusual patterns might indicate potential threats.
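As an illustration of clustering without labels, the following is a minimal k-means sketch in plain Python. It assumes a deterministic initialization (the first `k` points become the starting centroids) purely to keep the example reproducible; practical implementations usually initialize more carefully. The data points and function name are hypothetical.

```python
def kmeans(points, k, iterations=20):
    # Assumption for this sketch: use the first k points as initial centroids.
    centroids = [list(p) for p in points[:k]]
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        for i, p in enumerate(points):
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            assignments[i] = distances.index(min(distances))
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, a in zip(points, assignments) if a == j]
            if members:
                centroids[j] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, assignments

# Hypothetical 2-D data with two visibly separate groups
points = [(1.0, 1.0), (9.0, 9.0), (1.5, 2.0), (8.0, 8.0), (1.0, 0.5), (9.0, 8.5)]
centroids, assignments = kmeans(points, k=2)
```

No labels are supplied anywhere; the grouping in `assignments` emerges only from distances between the points.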

Reinforcement Learning

Reinforcement learning is a type of machine learning where an agent learns to make a series of decisions by interacting with an environment. The agent receives feedback in the form of rewards or punishments, which guide its future actions to maximize cumulative rewards. This learning mimics the natural process of trial and error.

Algorithms such as Q-learning and deep reinforcement learning (often using deep Q-networks) are prominent in this field. Real-world applications include autonomous driving, where the system learns to navigate and make driving decisions, and game playing, where agents develop strategies to win games like chess or Go by progressively improving through simulations.
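The trial-and-error loop can be sketched with tabular Q-learning on a toy problem. The environment below is an assumption made for illustration: a corridor of five states where the agent starts at the left end and receives a reward of 1.0 only on reaching the right end. The hyperparameters and names are likewise hypothetical.

```python
import random

N_STATES = 5      # states 0..4 in a corridor; state 4 is the goal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right

def step(state, action):
    """Hypothetical environment: reward 1.0 only when the goal is reached."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def choose_action(q_row, epsilon, rng):
    # Explore with probability epsilon, and break ties randomly.
    if rng.random() < epsilon or q_row[0] == q_row[1]:
        return rng.choice(ACTIONS)
    return 0 if q_row[0] > q_row[1] else 1

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _episode in range(episodes):
        state = 0
        for _step in range(max_steps):
            action = choose_action(q[state], epsilon, rng)
            next_state, reward, done = step(state, action)
            # Move the estimate toward reward + discounted best value of the next state.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q_table = q_learning()
# Greedy policy for the non-terminal states: 1 means "move right".
greedy_policy = [0 if row[0] > row[1] else 1 for row in q_table[:-1]]
```

The agent is never told that moving right is correct; it discovers this because the reward at the goal propagates backward through the table via the discount factor `gamma`.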

Core Algorithms and Techniques

Understanding the core algorithms and techniques of machine learning provides the foundation for building competent models. One of the most fundamental algorithms is linear regression, which is used for predictive analysis. It establishes a relationship between dependent and independent variables by fitting a linear equation to observed data. This method is straightforward to implement and interpret, making it ideal for scenarios where the relationship between variables is approximately linear. Its primary limitations are its inability to capture non-linear relationships, which causes it to underfit complex datasets, and its sensitivity to outliers; it can also overfit when there are many features relative to the number of observations, unless regularization is applied.
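For a single independent variable, fitting a linear equation by ordinary least squares has a closed form: the slope is the covariance of x and y divided by the variance of x, and the intercept follows from the means. The sketch below shows this under the assumption of one feature; the function name and data are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (single feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data generated from y = 2x + 1 with no noise
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]
slope, intercept = fit_line(xs, ys)  # slope 2.0, intercept 1.0
```

With noisy real-world data the recovered slope and intercept would only approximate the underlying relationship.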

Another commonly used algorithm is the decision tree. This technique recursively splits the dataset into subsets based on the value of an attribute, forming a tree structure. Each internal node tests a feature, each branch corresponds to an outcome of that test, and each leaf holds a prediction. Decision trees are intuitive, easy to visualize, and handle both numerical and categorical data. However, they can become overly complex and prone to overfitting if not pruned properly.
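The core step in growing a tree is choosing which feature and threshold to split on. One common criterion, assumed here for illustration, is Gini impurity: the split with the lowest weighted impurity across the two resulting subsets wins. The sketch below finds a single best split for numerical features; the data and function names are hypothetical, and a full tree would apply this step recursively to each subset.

```python
def gini(labels):
    """Gini impurity: 0.0 when all labels in the subset agree."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())

def best_split(rows, labels):
    """Return (weighted_gini, feature_index, threshold) for the best single split."""
    best = None
    n = len(rows)
    for f in range(len(rows[0])):
        for threshold in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[f] > threshold]
            if not left or not right:
                continue  # a split must send data down both branches
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[0]:
                best = (score, f, threshold)
    return best

# Hypothetical rows: [feature 0, feature 1]
rows = [[2.0, 7.0], [3.0, 1.0], [10.0, 6.0], [12.0, 2.0]]
labels = ["no", "no", "yes", "yes"]
split = best_split(rows, labels)
```

Here feature 0 separates the two classes cleanly at a threshold of 3.0, so the weighted impurity of that split is zero.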

Among more sophisticated methods, neural networks are loosely inspired by the structure and function of the human brain. They consist of interconnected nodes (neurons) arranged in layers, where each neuron computes a weighted sum of its inputs and passes the result through a non-linear activation function. Neural networks are highly effective in capturing intricate patterns in data, making them suitable for tasks like image and speech recognition. Yet, their complexity demands significant computational power and vast amounts of data for training, which can be a limiting factor.
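To show how layers of neurons can capture a pattern that no single linear equation can, here is a minimal forward pass through a two-layer network that computes XOR. The weights are hand-picked for this sketch rather than learned, and the layer sizes, ReLU activation, and names are assumptions for illustration; in practice the weights would be found by training.

```python
def relu(v):
    return max(0.0, v)

def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: each neuron takes a weighted sum plus a bias."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs

# Hand-picked (not trained) weights: two hidden neurons, one output neuron.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]

def forward(x):
    hidden = dense(x, HIDDEN_W, HIDDEN_B, activation=relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]

xor_outputs = [forward([a, b]) for a, b in [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]]
```

The non-linear activation in the hidden layer is what makes this possible: without it, the two layers would collapse into a single linear function, which cannot represent XOR.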

Support Vector Machines (SVMs) are another powerful technique, particularly in classification tasks. SVMs work by finding the hyperplane that separates the classes in the feature space with the largest margin. They are effective in high-dimensional spaces and are versatile with various kernel functions. Their key downside is training cost: kernel SVMs in particular scale poorly as the number of training examples grows.
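A linear SVM can be sketched as minimizing the hinge loss with a small regularization term, which pushes the separating hyperplane away from the nearest points of each class. The version below uses plain subgradient descent, a simpler technique than the solvers used by production SVM libraries, and it omits kernels entirely. The data, hyperparameters, and function names are hypothetical.

```python
def train_linear_svm(X, y, lr=0.01, reg=0.01, epochs=200):
    """Subgradient descent on the regularized hinge loss; labels must be -1 or +1."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for x, label in zip(X, y):
            margin = label * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:
                # Point is inside the margin or misclassified: push the hyperplane away.
                w = [wi - lr * (reg * wi - label * xi) for wi, xi in zip(w, x)]
                b += lr * label
            else:
                # Point is safely classified: apply only the regularization shrinkage.
                w = [wi - lr * reg * wi for wi in w]
    return w, b

def svm_predict(w, b, x):
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1

# Hypothetical, linearly separable data centered around the origin
X = [[-2.0, -1.0], [-1.0, -2.0], [-2.0, -2.0], [2.0, 1.0], [1.0, 2.0], [2.0, 2.0]]
y = [-1, -1, -1, 1, 1, 1]

w, b = train_linear_svm(X, y)
svm_training_predictions = [svm_predict(w, b, x) for x in X]
```

Only points with a margin below 1 contribute to the data-driven part of the update, which mirrors the idea that the hyperplane is determined by the examples closest to the boundary.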

An integral part of working with these algorithms is the training and evaluation of models. Model training involves feeding the algorithm with data to learn patterns and relationships. To evaluate the performance of a model, metrics such as accuracy, precision, and recall are used. Accuracy is the fraction of all predictions that are correct. Precision is the fraction of predicted positives that are actually positive, and recall is the fraction of actual positives that the model correctly identifies. Precision and recall often trade off against each other, so balancing these metrics is crucial to developing robust models.
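These three metrics can be computed directly from counts of true positives, false positives, false negatives, and true negatives. The sketch below assumes binary labels with `1` as the positive class; the function name and example predictions are hypothetical.

```python
def classification_metrics(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when there are no predicted or actual positives.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall

# Hypothetical results: 4 actual positives, 6 actual negatives
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
accuracy, precision, recall = classification_metrics(y_true, y_pred)
```

In this example the model is right 7 times out of 10, yet it finds only half of the actual positives, which shows why accuracy alone can give an overly favorable picture.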

Challenges and Future Directions

Machine learning has become a cornerstone of modern technology, yet it faces significant challenges that keep it from reaching its full potential. One of the primary concerns is data privacy. Because machine learning systems require vast amounts of data to function effectively, the collection and processing of this data raise serious privacy issues. Securing sensitive information and ensuring compliance with data protection regulations are persistent obstacles.

Another challenge is the necessity for large datasets. High-performance machine learning models often demand extensive and high-quality data, which can be difficult and costly to acquire. Moreover, this data must be representative of the real-world scenarios the models are expected to handle; otherwise, the models might fail in practical applications.

Bias in algorithms is a critical ethical issue within the field of machine learning. Models trained on biased data can produce unfair outcomes, perpetuating or even exacerbating societal inequalities. For instance, if a machine learning model used for hiring is trained on historical data that reflects past biases, it might favor certain demographics over others, leading to discriminatory practices. Addressing this issue requires diligent efforts to identify and mitigate biases during the data collection and model training phases.

Looking into the future, several trends and technologies promise to reshape the landscape of machine learning. Research is ongoing in developing more efficient algorithms that can work with smaller datasets or even synthetic data, reducing the dependency on vast quantities of real-world data. Advances in explainable AI are also emerging, aimed at creating models whose decision-making processes are transparent and understandable, thereby increasing trust and reliability.

Furthermore, quantum computing holds the potential to revolutionize machine learning by significantly enhancing computational capabilities, enabling more complex and faster data analysis than current classical computers allow. With continuous progress in these areas, machine learning systems are poised to become more robust, fair, and efficient.

As machine learning continues to evolve, its impact on society and various industries will only grow. From healthcare to finance, transportation to entertainment, the integration of machine learning technologies will drive innovation, provide predictive insights, and improve efficiency. Stakeholders must remain vigilant about ethical considerations, ensuring the responsible deployment of machine learning systems to build a future that benefits everyone.